Silencing Spark's Most Annoying Warning

Stop Spark warning that it truncated the query plan

November 14, 2022

Apache Spark is a chatty application; it logs early and often. Sure, some log messages increase Spark’s observability, but others seem questionable, like logging query plans, which can add over 1 MB of text to a single log message.¹

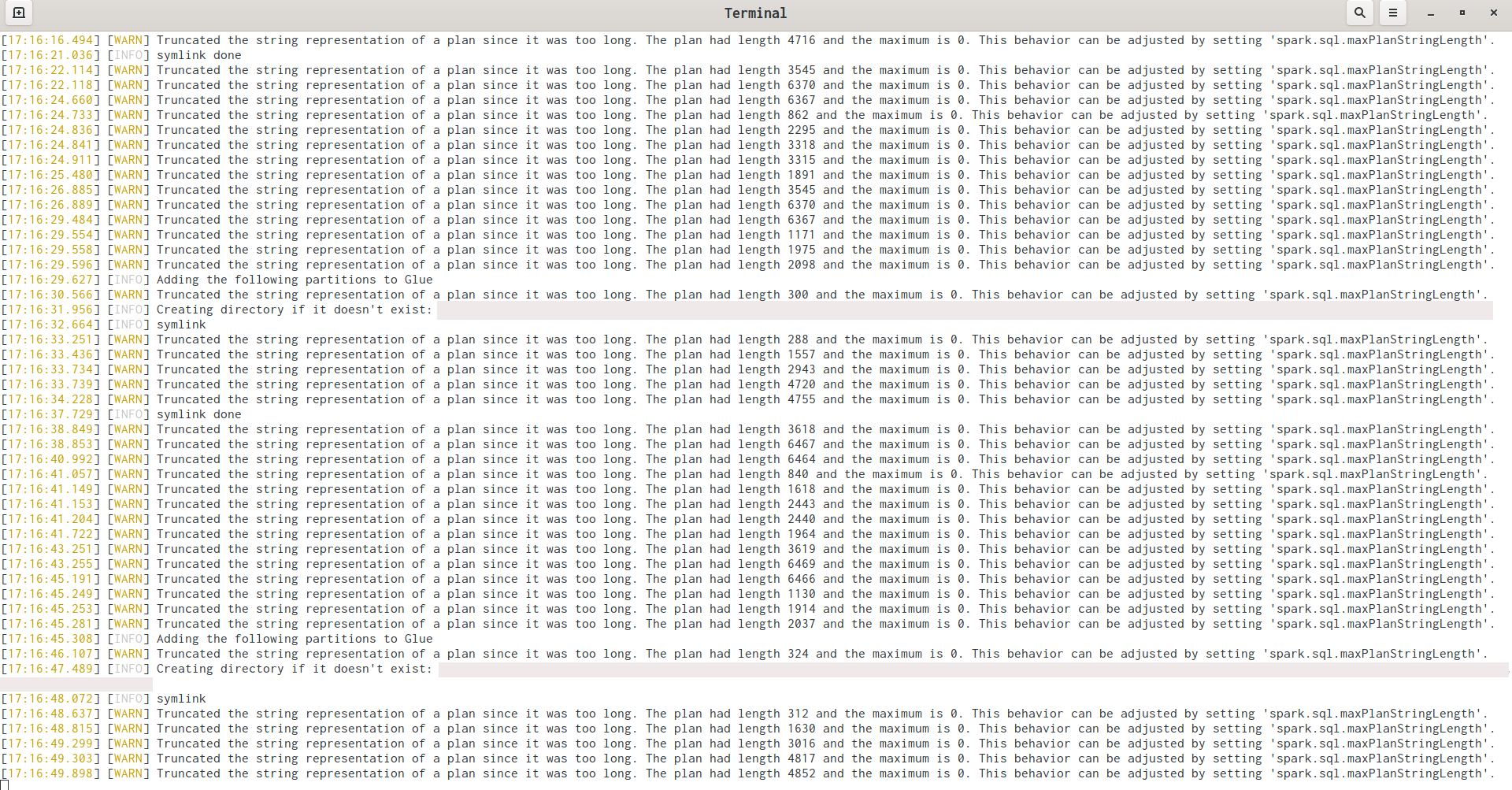

Writing enormous log messages causes issues with our logging pipeline, so at $work we set the Spark config option spark.sql.maxPlanStringLength to 0. This stops Spark from logging query plans. However, it has an unfortunate consequence: Spark now warns that it truncated the plan every time it would have logged one.

I understand the intention, but this is silly. If I’ve set the limit to zero, every query plan is going to exceed it.

The “truncated” warning actually comes in two flavors:

Truncated the string representation of a plan since it was too long. The plan had length ${length} and the maximum is ${maxLength}. This behavior can be adjusted by setting ‘spark.sql.maxPlanStringLength’.

And:

Truncated the string representation of a plan since it was too large. This behavior can be adjusted by setting ‘spark.sql.maxPlanStringLength’.

A quick fix for this is to raise the priority threshold of the relevant log4j loggers:

log4j.logger.org.apache.spark.sql.catalyst.util.StringUtils=ERROR

log4j.logger.org.apache.spark.sql.catalyst.util=ERROR

This works on Spark 3.x. As this is the only warning emitted by these loggers, raising their threshold to error doesn’t block other warnings.
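The two lines above use Log4j 1.x properties syntax. Spark 3.3 and later ship with Log4j 2, which uses a different properties format, so on those versions a rough equivalent might look like the sketch below. It assumes the configuration lives in a log4j2.properties file; the identifiers planStringUtils and catalystUtil are arbitrary labels chosen for this example, not names Spark defines.

```properties
# Sketch for Log4j 2 (Spark 3.3+), assuming conf/log4j2.properties is in use.
# "planStringUtils" and "catalystUtil" are arbitrary ids picked for illustration.

# Raise the threshold for the StringUtils logger
logger.planStringUtils.name = org.apache.spark.sql.catalyst.util.StringUtils
logger.planStringUtils.level = error

# Raise the threshold for the package-level logger
logger.catalystUtil.name = org.apache.spark.sql.catalyst.util
logger.catalystUtil.level = error
```

Each logger needs its own id, and the name and level are set on separate lines, but the effect is the same as the one-line entries in the older format.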

It would be nice if a future version of Spark stopped logging query plans altogether, but this might be inconvenient for some users. A backwards-compatible solution would be to add a new configuration option that disables logging of query plans. An explicit option like this would replace the implicit convention of setting spark.sql.maxPlanStringLength to 0, and Spark would no longer need to warn about truncation in case the limit was set by mistake.

Notes

¹ In practice. In theory, query plans can be much longer, as the default plan string length limit is 2^31-16 bytes (about 2 GB).